Explaining model predictions with Shapley values - Random Forest

Contents

Explaining model predictions with Shapley values - Random Forest#

Shapley values provide an estimate of how much any particular feature influences the model decision. When Shapley values are averaged they provide a measure of the overall influence of a feature.

Shapley values may be used across model types, and so provide a model-agnostic measure of a feature’s influence. This means that the influence of features may be compared across model types, and it allows black box models like neural networks to be explained, at least in part.

Here we will demonstrate Shapley values with random forests.

For more on Shapley values in general see Chris Molner’s excellent book chapter:

https://christophm.github.io/interpretable-ml-book/shapley.html

The shap package is installed if you have used the Titanic environment yasml file, but otherwise may be installed with pip install shap.

More information on the shap library, inclusiong lots of useful examples may be found at: https://shap.readthedocs.io/en/latest/index.html

Here we provide an example of using shap with Random Forests.

Shap values are retuened in a slightly different way to logistic regression - there is a set of Shap values for each classification probablility (‘not survive’, ‘survive’) so we need slightly different syntax to access and use the Shap values.

Load data and fit model#

Load modules#

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

# Import machine learning methods

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

# Import shap for shapley values

import shap # `pip install shap` if neeed

Load data#

The section below downloads pre-processed data, and saves it to a subfolder (from where this code is run). If data has already been downloaded that cell may be skipped.

Code that was used to pre-process the data ready for machine learning may be found at: https://github.com/MichaelAllen1966/1804_python_healthcare/blob/master/titanic/01_preprocessing.ipynb

download_required = True

if download_required:

# Download processed data:

address = 'https://raw.githubusercontent.com/MichaelAllen1966/' + \

'1804_python_healthcare/master/titanic/data/processed_data.csv'

data = pd.read_csv(address)

# Create a data subfolder if one does not already exist

import os

data_directory ='./data/'

if not os.path.exists(data_directory):

os.makedirs(data_directory)

# Save data

data.to_csv(data_directory + 'processed_data.csv', index=False)

data = pd.read_csv('data/processed_data.csv')

# Make all data 'float' type

data = data.astype(float)

Divide into X (features) and y (labels)#

We will separate out our features (the data we use to make a prediction) from our label (what we are truing to predict).

By convention our features are called X (usually upper case to denote multiple features), and the label (survived or not) y.

# Use `survived` field as y, and drop for X

y = data['Survived'] # y = 'survived' column from 'data'

X = data.drop('Survived',axis=1) # X = all 'data' except the 'survived' column

# Drop PassengerId

X.drop('PassengerId',axis=1, inplace=True)

Divide into training and tets sets#

When we test a machine learning model we should always test it on data that has not been used to train the model.

We will use sklearn’s train_test_split method to randomly split the data: 75% for training, and 25% for testing.

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42, test_size=0.25)

Fit Random Forest model#

model = RandomForestClassifier(n_estimators=100, n_jobs=-1, class_weight='balanced', random_state=42)

model.fit(X_train, y_train)

RandomForestClassifier(class_weight='balanced', n_jobs=-1, random_state=42)

Predict values and get probabilities of survival#

Now we can use the trained model to predict survival. We will test the accuracy of both the training and test data sets.

# Predict training and test set labels

y_pred_train = model.predict(X_train)

y_pred_test = model.predict(X_test)

# Predict probabilities of survival

y_prob_train = model.predict_proba(X_train)

y_prob_test = model.predict_proba(X_test)

Calculate accuracy#

In this example we will measure accuracy simply as the proportion of passengers where we make the correct prediction. In a later notebook we will look at other measures of accuracy which explore false positives and false negatives in more detail.

accuracy_train = np.mean(y_pred_train == y_train)

accuracy_test = np.mean(y_pred_test == y_test)

print (f'Accuracy of predicting training data = {accuracy_train:0.3f}')

print (f'Accuracy of predicting test data = {accuracy_test:0.3f}')

Accuracy of predicting training data = 0.984

Accuracy of predicting test data = 0.794

Examining the model importances#

features = list(X_train)

feature_importances = model.feature_importances_

importances = pd.DataFrame(index=features)

importances['importance'] = feature_importances

importances['rank'] = importances['importance'].rank(ascending=False).values

importances.sort_values('rank').head()

| importance | rank | |

|---|---|---|

| male | 0.238135 | 1.0 |

| Fare | 0.235720 | 2.0 |

| Age | 0.213044 | 3.0 |

| CabinNumber | 0.051892 | 4.0 |

| Pclass | 0.051791 | 5.0 |

The three most influential features are:

male

Fare

age

Note: random forest importances do not tell us anything about the direction of effect of features (as with random forests, the direction of effect may depend on the value oif other features).

Get Shapley values#

We use the shap_values method from the SHAP library to get Shapley values.

We use the explainer method from the SHAP library to get Shapley values along with other data.

We pass a sample to the explainer to speed up Shap (which can be slow with random forests - these values are used as expected baseline values for features).

check_additivity has been disabled as the fit reported a small differnce between between predicted probability and baseline probability with all Shap values summed.

# Get list of features

features = list(X_train)

# Train explainer on Training set

explainer = shap.TreeExplainer(model, X_train.sample(100))

# Get Shap values (extended version has other data returned as well as shap values)

shapley_values_train_extended = explainer(X_train, check_additivity=False)

shapley_values_train = shapley_values_train_extended.values[:,:,1]

shapley_values_test_extended = explainer(X_test, check_additivity=False)

shapley_values_test = shapley_values_test_extended.values[:,:,1]

# Calculate mean Shapley value for each feature in trainign set

importances['mean_shapley_values'] = np.mean(shapley_values_train, axis=0)

# Calculate mean absolute Shapley value for each feature in trainign set

# This will give us the average importance of each feature

importances['mean_abs_shapley_values'] = np.mean(

np.abs(shapley_values_train),axis=0)

97%|=================== | 1291/1336 [00:15<00:00]

Add Shapley values to coefficient table.

importances.sort_values(by='importance', ascending=False).head()

importances.head(10)

| importance | rank | mean_shapley_values | mean_abs_shapley_values | |

|---|---|---|---|---|

| Pclass | 0.051791 | 5.0 | -0.004146 | 0.060243 |

| Age | 0.213044 | 3.0 | 0.002032 | 0.051654 |

| SibSp | 0.049641 | 6.0 | -0.000486 | 0.018311 |

| Parch | 0.037600 | 7.0 | -0.000857 | 0.013924 |

| Fare | 0.235720 | 2.0 | -0.000917 | 0.061949 |

| AgeImputed | 0.019413 | 8.0 | 0.000780 | 0.016755 |

| EmbarkedImputed | 0.000133 | 23.0 | 0.000013 | 0.000013 |

| CabinLetterImputed | 0.007557 | 13.0 | -0.000580 | 0.005038 |

| CabinNumber | 0.051892 | 4.0 | -0.002880 | 0.029931 |

| CabinNumberImputed | 0.018340 | 9.0 | -0.002088 | 0.021056 |

Get top 10 influenctial features by co-effieceints or Shapley

# Get top 10 features

importance_top_10 = \

importances.sort_values(by='importance', ascending=False).head(10).index

shapley_top_10 = \

importances.sort_values(

by='mean_abs_shapley_values', ascending=False).head(10).index

# Add to DataFrame

top_10_features = pd.DataFrame()

top_10_features['importances'] = importance_top_10.values

top_10_features['Shapley'] = shapley_top_10.values

# Display

top_10_features

| importances | Shapley | |

|---|---|---|

| 0 | male | male |

| 1 | Fare | Fare |

| 2 | Age | Pclass |

| 3 | CabinNumber | Age |

| 4 | Pclass | CabinNumber |

| 5 | SibSp | CabinNumberImputed |

| 6 | Parch | Embarked_S |

| 7 | AgeImputed | SibSp |

| 8 | CabinNumberImputed | Embarked_C |

| 9 | Embarked_S | AgeImputed |

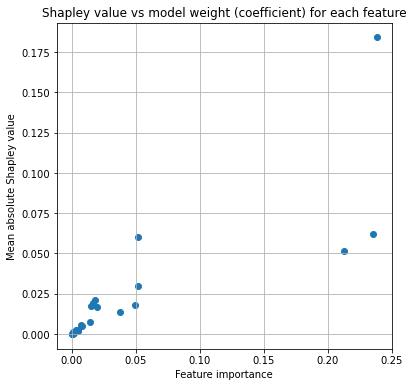

We can see a lot of overlap between the most import fatures as estimated by coefficients and those estimated using mean absolute Shapley values. But they are not identical.

Plot comparison of Shapley and mode coefficients:

fig = plt.figure(figsize=(6,6))

ax = fig.add_subplot(111)

# Plot points

x = importances['importance']

y = importances['mean_abs_shapley_values']

ax.scatter(x, y)

ax.set_title('Shapley value vs model weight (coefficient) for each feature')

ax.set_ylabel('Mean absolute Shapley value')

ax.set_xlabel('Feature importance')

plt.grid()

plt.show()

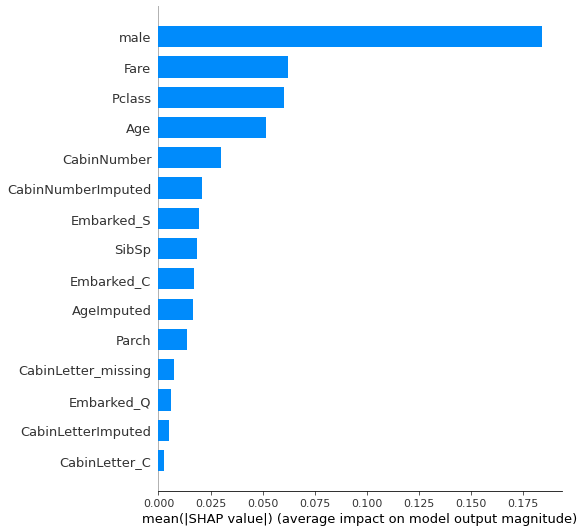

Plots of Shapley values#

Summary plot#

The summary_plot using a plot_type option of bar gives us the overall importance of each feature across the population.

Here we limit the num,ber of features shown to 15 (default is 20).

fig = plt.figure(figsize=(6,6))

shap.summary_plot(shap_values = shapley_values_train,

features = X_train.values,

feature_names = X_train.columns.values,

plot_type='bar',

max_display=15,

show=False)

plt.tight_layout()

plt.show()

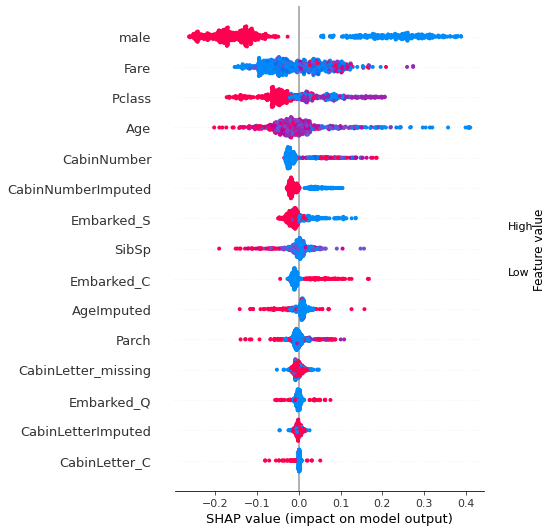

Beeswarm plot#

Without specifying a plot_type option of bar, summary_plot gives us a beeswarm plot, showing the Shapley values for all instances.

fig = plt.figure(figsize=(6,6))

shap.summary_plot(shap_values = shapley_values_train,

features = X_train.values,

feature_names = X_train.columns.values,

max_display=15,

show=False)

plt.tight_layout()

plt.show()

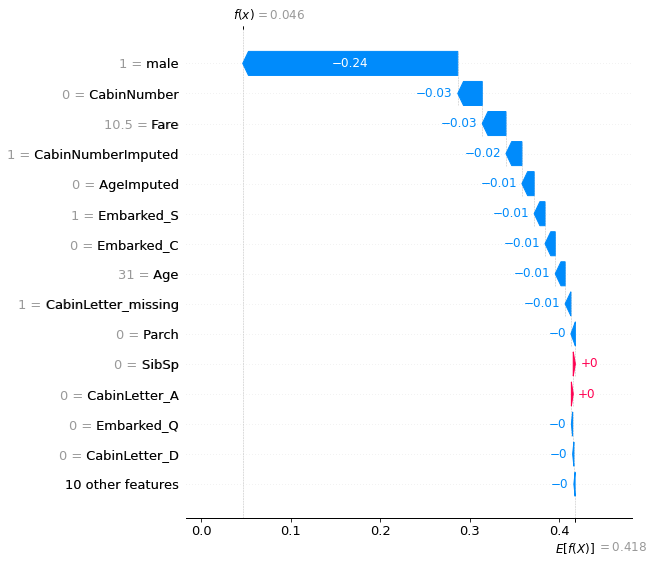

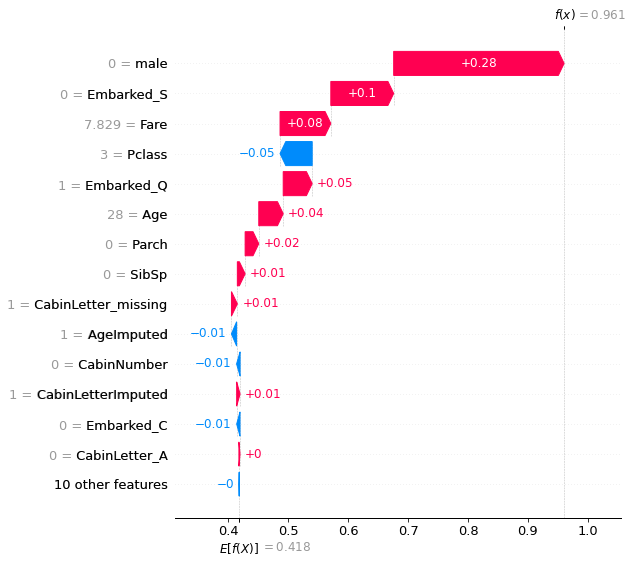

Waterfall plot#

Waterfall plots show the influence of individual features on model prediction. These are shown as the effect on log odds ratio of survival. Log odds ratio are usually shown as these are additive, whereas probabilities are not.

Waterfall plots put the most influential features at the top.

Get locations of passengers with low or high probability of survival.

y_prob = model.predict_proba(X_test)[:,1]

# Get the location of an example each where porbability of giving thrombolysis

# is <0.01 or >0.99

location_low_probability = np.where(y_prob < 0.05)[0][0]

location_high_probability = np.where(y_prob > 0.95)[0][0]

Waterfall plot for passenger with lowest probability of survival:

shap.plots.waterfall(shapley_values_test_extended[location_low_probability][:,1],

max_display=15)

Waterfall plot for passenger with high probability of survival:

shap.plots.waterfall(shapley_values_test_extended[location_high_probability][:,1],

max_display=15)

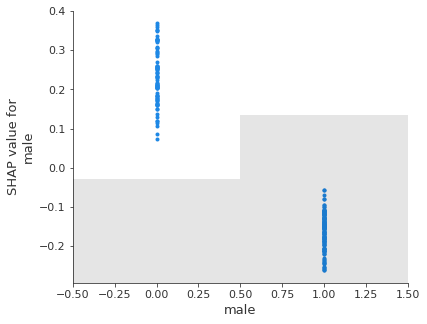

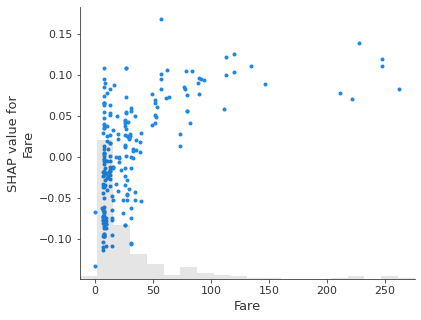

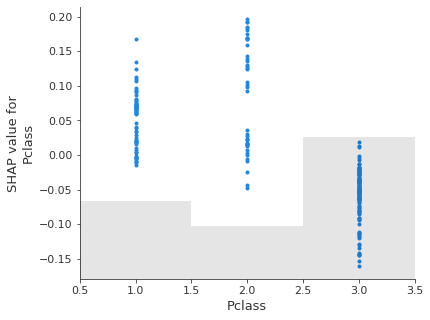

Scatter plot#

A scattter plot for one or more features shows the relationship between the feature value and the Shap value, along with a histogram of the frequency of the feature values.

feat_to_show = shapley_top_10[0:3]

for feat in feat_to_show:

shap.plots.scatter(shapley_values_test_extended[:, feat][:,1], x_jitter=0)

Note: unlike a logistic regression model, Shap values are not linearly releated to feature values. This is because of the more flexible classification method in random forests.